The load balancer of the ingress controller serves as the entry point for external traffic into a Kubernetes cluster, which makes it an important component to secure. By limiting the source IP ranges that may reach it, we can ensure that only trusted traffic enters the cluster. In this blog post, we will explore how to restrict the source IP ranges of your ingress controller's load balancer. I will provide two examples of how to achieve this using the loadBalancerSourceRanges property.


Use Case

Last week, my colleagues and I brainstormed how to restrict access to a cluster from the outside. In other words, we aimed to limit ingress traffic to the applications exposed on the cluster, allowing only clients from specific IP ranges to reach them. Basically, you have the following options to achieve this:

- Configure appropriate firewall rules in your VPC: The rules should allow only certain IP ranges to access the IP of your service of type LoadBalancer.

- Network policies: With network policies, you can restrict ingress traffic to pods. In theory, this also works for traffic that comes from outside of the cluster to the ingress controller pods.

- Ingress controller specific annotations: Some ingress controllers, such as the NGINX ingress controller, provide annotations that allow you to specify allowed IP source ranges for clients.

- loadBalancerSourceRanges field in the spec of a Kubernetes service of type LoadBalancer: Using this field, you can specify a list of IP CIDR (Classless Inter-Domain Routing) ranges that are allowed to access the load balancer.

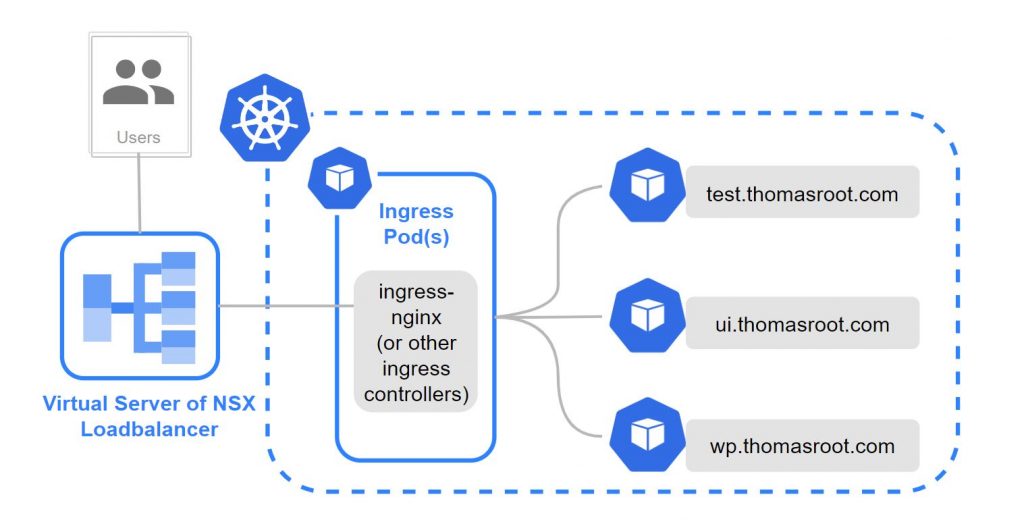

Our setup is an on-premises Kubernetes cluster provided by a Tanzu Kubernetes Grid Integrated installation with NSX network virtualization. The cluster includes some sample apps which are exposed to the external network through an NGINX ingress controller. The creation of the NGINX ingress controller automatically generates a Kubernetes service of type LoadBalancer. This is where the NSX Container Plugin (NCP) CNI driver comes into play, triggering the creation of a VirtualServer instance in NSX in the background.

Option 1: Configure appropriate firewall rules in your VPC

In our case, the restriction had to be configured on the Kubernetes layer using Kubernetes default capabilities. Hence, option 1, which involves restricting incoming traffic through firewall rules in the VPC, is not feasible for our setup.

Option 2: Network policies

At first, we thought network policies were the way to go. However, upon examining the logs of the NGINX ingress controller, we discovered that the source IP address recorded for a request was not the IP address of the client that actually sent it. After some research, we learned that this is by-design behavior of NSX and the NSX Container Plugin (NCP) in the cluster. Whether the source IP of a client request is preserved depends on the implementation of the cloud provider. In short: some load balancers act as packet forwarders and preserve the client IP, while others act as proxies and replace the original client IP with their own. In our NSX setup, the source IP is replaced by the IP of the tier-1 router’s uplink port. Whether network policies work for this purpose therefore depends entirely on your setup!
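On the Kubernetes side, one more hop can rewrite the source IP: kube-proxy. The Service field externalTrafficPolicy controls this. The following is a minimal sketch, assuming the controller pods carry the default labels of the ingress-nginx Helm chart. With Local, external traffic is only delivered to controller pods on the node that received it and is not source-NATed, so the pods see whatever source IP the load balancer handed over. It cannot restore a client IP that a proxying load balancer, like the NSX one in our setup, has already replaced.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: LoadBalancer
  # Local: traffic goes only to controller pods on the receiving node and is not
  # source-NATed by kube-proxy, so the pods see the source IP that reached the node.
  # Cluster (the default) source-NATs this traffic, so pods typically see a node IP.
  externalTrafficPolicy: Local
  # Selector labels assumed from the ingress-nginx Helm chart defaults
  selector:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/name: ingress-nginx
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http
```

Only if the source IP survives both hops, the load balancer and kube-proxy, can an ipBlock rule in a network policy match the real client.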

The following network policy is a whitelist approach for ingress traffic in the ingress-nginx namespace: it selects all pods in the namespace and drops all incoming traffic to them except traffic from the IP range 1.1.1.0/24. By the way, there is a great network policy editor by Cilium that visualizes how your network policies work. Check it out here!

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: whitelist-ingress-traffic
  namespace: ingress-nginx
spec:
  podSelector: {}
  ingress:
  - from:
    - ipBlock:
        cidr: 1.1.1.0/24
```
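A narrower variant of the same idea, as a hedged sketch: instead of every pod in the namespace, it selects only the controller pods (labels assumed from the ingress-nginx Helm chart defaults) and opens only TCP ports 80 and 443. The policy name is hypothetical. Like the policy above, it only has an effect if the original client IP still reaches the pods.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  # Hypothetical name for this variant
  name: whitelist-ingress-controller-ports
  namespace: ingress-nginx
spec:
  # Select only the controller pods (labels assumed from the Helm chart defaults)
  podSelector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/component: controller
  policyTypes:
  - Ingress
  ingress:
  # Allow HTTP/HTTPS only from the trusted range; all other ingress to these pods is dropped
  - from:
    - ipBlock:
        cidr: 1.1.1.0/24
    ports:
    - protocol: TCP
      port: 80
    - protocol: TCP
      port: 443
```

Keep in mind that a policy like this also blocks every other port on the selected pods, for example the admission webhook port if your installation enables it.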

The video below is a brilliant talk about networking in Google Container Engine. It contains a really good explanation of how a packet finds its way from an external system to the destination pod.

Option 3: Ingress controller specific annotation

This option wouldn’t work in our environment because, as explained above, the original client IP is not preserved when the packets pass through the load balancer. Therefore, whitelisting with ingress annotations is not possible.
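For reference, this is roughly what such an annotation looks like with the NGINX ingress controller in a setup where the client IP is preserved. The sketch assumes the nginx.ingress.kubernetes.io/whitelist-source-range annotation of the controller version used later in this post (1.5.1). Newer releases may document it under a different name, so check the annotation reference for your version. The Ingress name, host, IngressClass name, and backend service are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-app                # hypothetical app
  namespace: default
  annotations:
    # Comma-separated list of CIDRs allowed to access this Ingress
    nginx.ingress.kubernetes.io/whitelist-source-range: "1.1.1.0/24"
spec:
  ingressClassName: nginx         # assumed IngressClass name of the controller
  rules:
  - host: app.example.com         # hypothetical host
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: sample-app      # hypothetical backend service
            port:
              number: 80
```

Because the controller compares the allowed ranges against the source IP it sees, behind a proxying load balancer it would compare them against the load balancer's IP instead of the client's.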

Option 4: loadBalancerSourceRanges field

The loadBalancerSourceRanges field in the spec of a Kubernetes service of type LoadBalancer specifies a list of IP CIDR (Classless Inter-Domain Routing) ranges that are allowed to access the load balancer. Basically, it is a security feature used to restrict access to necessary/trusted sources only. The load balancer only forwards traffic to the pods if it originates from an IP address within the specified source ranges. If you create a service of type LoadBalancer without explicitly specifying the loadBalancerSourceRanges field, requests from the source 0.0.0.0/0 are allowed by default, which means that requests from every source IP address are accepted. If you set the loadBalancerSourceRanges field, please make sure that your cloud provider or, respectively, your CNI (Container Networking Interface) driver supports this feature. Otherwise, it will be ignored!
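A minimal sketch of the field in a standalone Service manifest. The service name, selector, ports, and the second CIDR (taken from the 203.0.113.0/24 documentation range) are hypothetical. Every listed range is allowed, and all other sources are rejected, provided that the cloud provider or CNI driver enforces the field.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-lb               # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: example                 # hypothetical pod label
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 8080             # hypothetical container port
  # Only these source ranges may reach the load balancer; omitting the field
  # is equivalent to allowing 0.0.0.0/0
  loadBalancerSourceRanges:
  - 1.1.1.0/24
  - 203.0.113.0/24               # hypothetical second range (documentation prefix)
```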

In the following sections, I will give you a quick overview of how to use the loadBalancerSourceRanges field within an on-premises VMware Tanzu Kubernetes Grid Integrated setup backed by NSX and how to use it in GCP.

On-Premises Example: VMware Tanzu Kubernetes Grid Integrated

CAUTION: Unfortunately, this feature only works if you are already using the Policy API of NSX. This is a mandatory requirement for using the loadBalancerSourceRanges field in an NSX environment! If you are using older Tanzu Kubernetes Grid products like Tanzu Kubernetes Grid Integrated (TKGi), you may still be using the deprecated Manager API of NSX. Other legacy TKG versions, such as TKGm (Tanzu Kubernetes Grid Multicloud) and TKGs (vSphere with Tanzu), may also still be using the Manager API. Since TKGi is the only legacy product I am familiar with, I cannot confirm this 100% for the other TKG products. Please check your current setup before using this feature!

I used the following setup for testing the loadBalancerSourceRanges field with Tanzu Kubernetes Grid Integrated (TKGi):

- TKGi 1.14 Installation backed by NSX

- NSX 3.2 (Policy-API only!)

- Ingress NGINX Controller Deployment

You just have to add the loadBalancerSourceRanges field to the spec of the ingress controller’s service of type LoadBalancer:

```yaml
# ...
spec:
  loadBalancerSourceRanges:
  - 1.1.1.0/24
  # ...
```

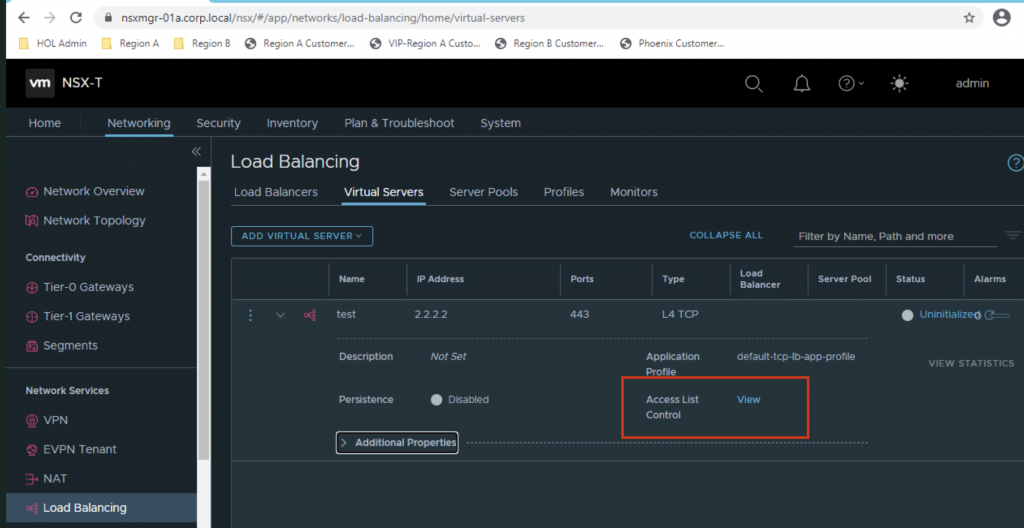

After you apply this change to the service resource, the NSX virtual server is modified and only allows traffic from the specified source IP range 1.1.1.0/24. You can verify this in NSX Manager by looking at the virtual server’s Access List Control (see screenshot). It allows access from a specific group that contains the IP addresses specified in loadBalancerSourceRanges. You can inspect this group by navigating to Inventory > Groups. Please refer to the official docs as well.

Your cluster is now slightly more secure 🙂

Public Cloud Example: Google Kubernetes Engine (GKE)

Here is my setup for testing the loadBalancerSourceRanges field with Google Kubernetes Engine (GKE):

- GKE cluster (in a minimal setup with Autopilot and in public network mode)

- Ingress NGINX Controller Deployment

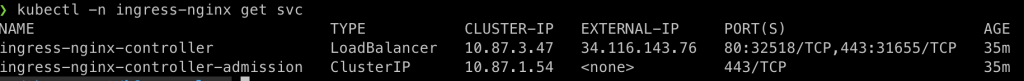

After deploying the ingress NGINX controller with Helm, a service of type LoadBalancer is created, in my case with the external IP . Without any additional restriction, this IP is reachable from the Internet!

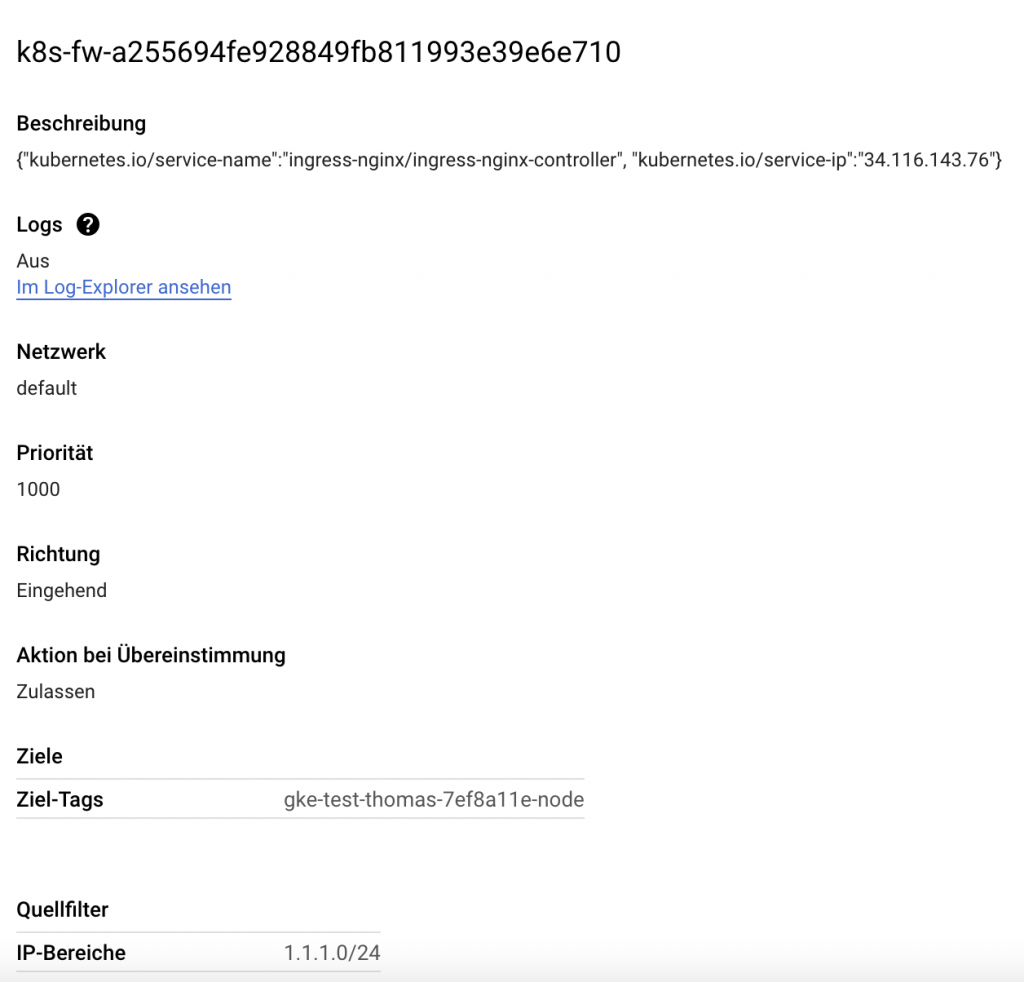

With this default configuration, kube-proxy creates the corresponding iptables rules on the Kubernetes nodes, and GKE automatically creates a firewall rule in your VPC network.

You just have to add the loadBalancerSourceRanges field to the spec of the Kubernetes service of type LoadBalancer. In the following example, I restrict the allowed source range to 1.1.1.0/24:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    cloud.google.com/neg: '{"ingress":true}'
    meta.helm.sh/release-name: ingress-nginx
    meta.helm.sh/release-namespace: ingress-nginx
  creationTimestamp: "2023-02-03T15:32:00Z"
  finalizers:
  - service.kubernetes.io/load-balancer-cleanup
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
    app.kubernetes.io/version: 1.5.1
    helm.sh/chart: ingress-nginx-4.4.2
  name: ingress-nginx-controller
  namespace: ingress-nginx
  resourceVersion: "16278"
  uid: 255694fe-9288-49fb-8119-93e39e6e7106
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 10.87.3.47
  clusterIPs:
  - 10.87.3.47
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  # Only clients from this CIDR range may reach the load balancer
  loadBalancerSourceRanges:
  - 1.1.1.0/24
  ports:
  - name: http
    nodePort: 32518
    port: 80
    protocol: TCP
    targetPort: http
  # ... (remaining fields such as the selector omitted)
  type: LoadBalancer
```

With this configuration, kube-proxy creates the corresponding iptables rules on the Kubernetes nodes. After creating or updating the service of type LoadBalancer of your ingress controller, you will find a new allow rule in your VPC firewall. Requests from every other client IP to the apps behind the load balancer will be blocked.

That’s all! Now, only clients within the IP range of 1.1.1.0/24 can reach the load balancer.
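Since this service is managed by Helm (note the meta.helm.sh annotations above), you can also keep the range in the chart values. That way it is part of the release configuration rather than a manual change to a Helm-managed object. The following is a sketch that assumes the ingress-nginx chart exposes the value controller.service.loadBalancerSourceRanges. Please verify the key against the values.yaml of your chart version.

```yaml
# values.yaml for the ingress-nginx Helm chart (key path assumed; verify for your chart version)
controller:
  service:
    # Rendered into spec.loadBalancerSourceRanges of the controller service
    loadBalancerSourceRanges:
    - 1.1.1.0/24
```

Assuming the chart repository was added under the name ingress-nginx, the values could then be applied with `helm upgrade ingress-nginx ingress-nginx/ingress-nginx -n ingress-nginx -f values.yaml`.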

This works in a similar way on pretty much every major cloud provider: